Pioneering Free Tools for AI Artwork

Key Takeaways

- Less censorship: Local LLMs offer the freedom to discuss thought-provoking topics without the restrictions imposed on public chatbots, allowing for more open conversations.

- Better data privacy: With a local LLM, all the data you generate stays on your computer, so companies running public-facing LLMs never get access to it.

- Offline usage: Local LLMs allow for uninterrupted usage in remote or isolated areas without reliable internet access, providing a valuable tool in such scenarios.

Since the arrival of ChatGPT in November 2022, the term large language model (LLM) has quickly gone from a niche term for AI nerds to a buzzword on everyone’s lips. The greatest allure of a local LLM is the ability to replicate what a chatbot like ChatGPT can do on your own computer, without the baggage of a cloud-hosted version.

Arguments exist for and against setting up a local LLM on your computer. We’ll cut the hype and bring you the facts. Should you use a local LLM?

The Pros of Using Local LLMs

Why are people so hyped about setting up their own large language models on their computers? Beyond the hype and bragging rights, what are some practical benefits?

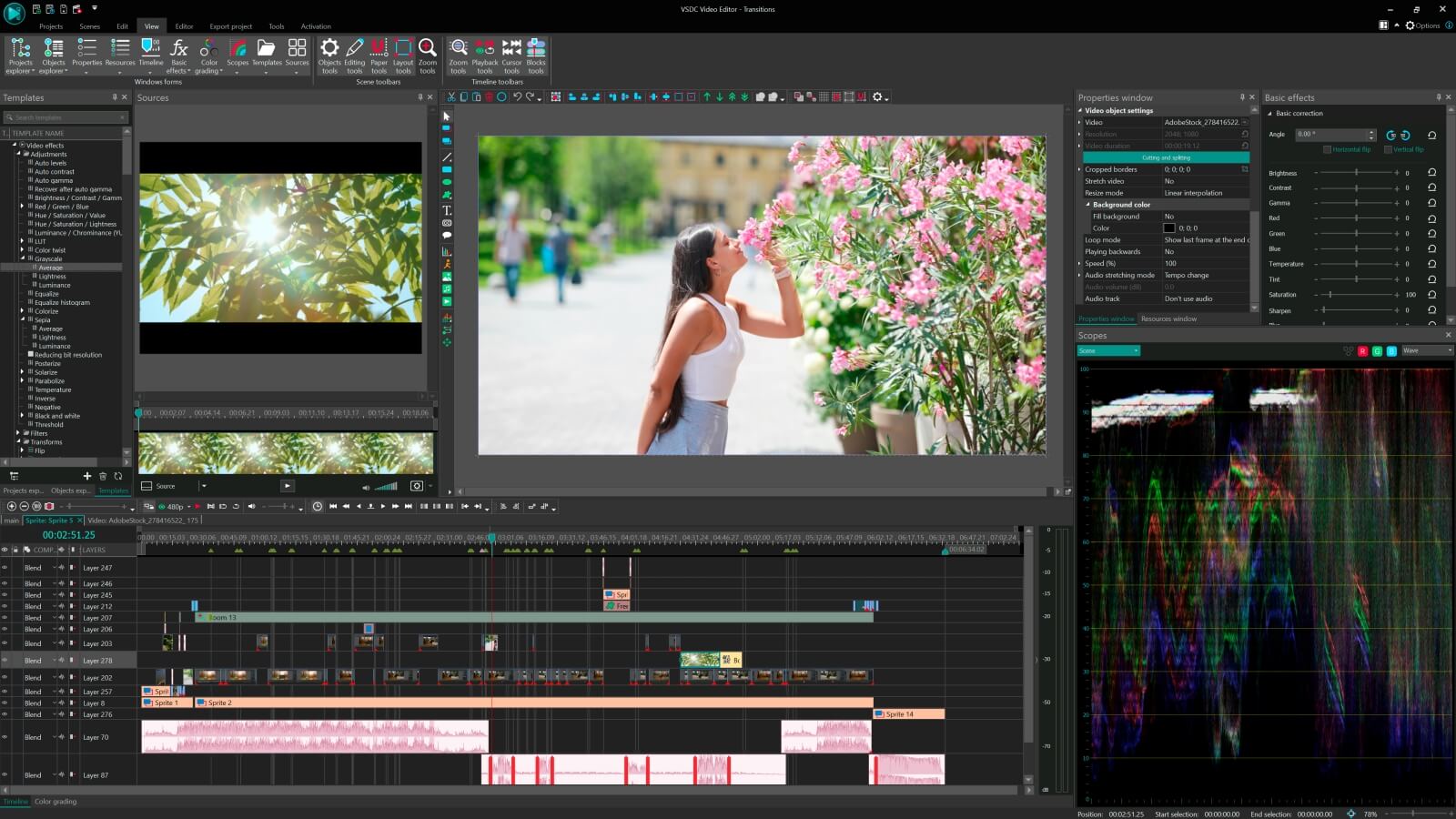

Key features:

• Import from any devices and cams, including GoPro and drones. All formats supported. Сurrently the only free video editor that allows users to export in a new H265/HEVC codec, something essential for those working with 4K and HD.

• Everything for hassle-free basic editing: cut, crop and merge files, add titles and favorite music

• Visual effects, advanced color correction and trendy Instagram-like filters

• All multimedia processing done from one app: video editing capabilities reinforced by a video converter, a screen capture, a video capture, a disc burner and a YouTube uploader

• Non-linear editing: edit several files with simultaneously

• Easy export to social networks: special profiles for YouTube, Facebook, Vimeo, Twitter and Instagram

• High quality export – no conversion quality loss, double export speed even of HD files due to hardware acceleration

• Stabilization tool will turn shaky or jittery footage into a more stable video automatically.

• Essential toolset for professional video editing: blending modes, Mask tool, advanced multiple-color Chroma Key

1. Less Censorship

When ChatGPT and Bing AI first came online, the things both chatbots were willing to say and do were as fascinating as they were alarming. Bing AI acted warm and lovely, like it had emotions. ChatGPT was willing to use curse words if you asked nicely. At the time, both chatbots would even help you make a bomb if you used the right prompts. This might sound like all shades of wrong, but being able to do anything was emblematic of the unrestricted capabilities of the language models that powered them.

Today, both chatbots have been so tightly censored that they won’t even help you write a fictional crime novel with violent scenes. Some AI chatbots won’t even talk about religion or politics. Although LLMs you can set up locally aren’t entirely censorship-free, many of them will gladly do the thought-provoking things the public-facing chatbots won’t do. So, if you don’t want a robot lecturing you about morality when discussing topics of personal interest, running a local LLM might be the way to go.

2. Better Data Privacy

One of the primary reasons people opt for a local LLM is to ensure that whatever happens on their computer stays on their computer. Using a local LLM is like having a private conversation in your living room: no one outside can listen in. Whether you’re working with sensitive details like credit card numbers or having personal conversations with the LLM, all the resulting data is stored only on your computer. The alternative is using public-facing LLMs like GPT-4, which give the companies in charge access to your chat information.

3. Offline Usage

With the internet being widely affordable and accessible, offline access might seem like a trivial reason to use a local LLM. However, offline access could become critical in remote or isolated locations where internet service is unreliable or unavailable. In such scenarios, a local LLM that operates independently of an internet connection becomes a vital tool, letting you keep doing whatever you want to do without interruption.

4. Cost Savings

The average price of accessing a capable LLM like GPT-4 or Claude 2 is $20 per month. Although that might not seem like an alarming price, you still get several annoying restrictions for that amount. For instance, with GPT-4 accessed via ChatGPT, you are stuck with a cap of 50 messages every three hours. You can only get past those limits by switching to the ChatGPT Enterprise plan, which could potentially cost thousands of dollars. With a local LLM, once you’ve set up the software, there is no $20 monthly subscription or other recurring cost to pay. It’s like buying a car instead of relying on ride-share services. Initially, it’s expensive, but over time, you save money.
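To make the car analogy concrete, here is a rough break-even calculation. Only the $20 monthly price comes from the text above; the hardware cost, the electricity estimate, and the function name `break_even_months` are hypothetical assumptions for illustration, not figures from this article.

```python
import math


def break_even_months(hardware_cost: float,
                      monthly_subscription: float = 20.0,
                      monthly_running_cost: float = 0.0) -> int:
    """Months until a one-off hardware purchase beats paying a subscription.

    hardware_cost: extra up-front spend on a machine able to run a local LLM (hypothetical).
    monthly_subscription: price of the hosted plan being replaced.
    monthly_running_cost: assumed extra electricity etc. for the local setup.
    """
    monthly_saving = monthly_subscription - monthly_running_cost
    if monthly_saving <= 0:
        raise ValueError("the local setup never pays for itself under these assumptions")
    # Round up: a partial month still has to be paid for.
    return math.ceil(hardware_cost / monthly_saving)


# Hypothetical: a $1,200 GPU upgrade replacing a $20/month plan,
# with an assumed $5/month of extra electricity.
print(break_even_months(1200, 20, 5))  # 80
print(break_even_months(1200))         # 60 (ignoring running costs)
```

Under these made-up numbers, the upgrade takes 60 to 80 months to pay for itself, so the savings argument depends heavily on what hardware you already own.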

5. Better Customization

Publicly available AI chatbots have restricted customization due to security and censorship concerns. With a locally hosted AI assistant, you can fully customize the model for your specific needs. You can train the assistant on proprietary data tailored to your use cases, improving relevance and accuracy. For example, a lawyer could optimize their local AI to generate more precise legal insights. The key benefit is control over customization for your unique requirements.

The Cons of Using Local LLMs

Before you make the switch, there are some downsides to using a local LLM you should consider.

1. Resource Intensive

To run a performant local LLM, you’ll need high-end hardware: think powerful CPUs, lots of RAM, and likely a dedicated GPU. Don’t expect a $400 budget laptop to provide a good experience; responses will be painfully slow, especially with larger AI models. It’s like running cutting-edge video games, where you need beefy specs for optimal performance. You may even need specialized cooling solutions. The bottom line is that local LLMs require an investment in top-tier hardware to match (or even improve on) the speed and responsiveness you enjoy on web-based LLMs, because the computing demands now fall on your machine instead of a provider’s servers.
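A common rule of thumb for why local models are so memory-hungry: the weights alone take roughly (number of parameters) × (bits per parameter) ÷ 8 bytes. The sketch below assumes that approximation and hypothetical 16-, 8- and 4-bit (quantized) versions of a 7-billion-parameter model; it ignores the KV cache, activations and runtime overhead, so real memory use will be higher.

```python
def weight_memory_gb(num_params: float, bits_per_param: int) -> float:
    """Approximate memory needed just to hold the model weights, in GB (10**9 bytes).

    Does not include the KV cache, activations or framework overhead.
    """
    bytes_total = num_params * bits_per_param / 8
    return bytes_total / 1e9


# Hypothetical 7B-parameter model stored at different precisions.
for bits in (16, 8, 4):
    print(f"7B model at {bits}-bit: ~{weight_memory_gb(7e9, bits):.1f} GB")
# 7B model at 16-bit: ~14.0 GB
# 7B model at 8-bit: ~7.0 GB
# 7B model at 4-bit: ~3.5 GB
```

By the same arithmetic, a 70-billion-parameter model at 16-bit needs about 140 GB for its weights alone, far more than the memory on a typical consumer GPU.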

2. Slower Responses and Inferior Performance

A common limitation of local LLMs is slower response times. The exact speed depends on the specific AI model and hardware used, but most setups lag behind online services. After experiencing instant responses from ChatGPT, Bard, and others, local LLMs can feel jarringly sluggish: words trickle out slowly instead of appearing almost at once. This isn’t universally true, as some local deployments achieve good performance, but average users face a steep drop-off from the snappy web experience. So, prepare for a “culture shock” when moving from fast online systems to slower local equivalents.

In short, unless you’re rocking an absolute top-of-the-line setup (we’re talking an AMD Ryzen 7 5800X3D with an Nvidia RTX 4090 and enough RAM to sink a ship), the overall performance of your local LLM won’t compare to the online generative AI chatbots you’re used to.
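The gap between “trickling out” and “snappy” comes down to how many tokens the model generates per second. This back-of-the-envelope sketch assumes a 500-token answer and two hypothetical throughputs; the numbers are illustrative, not benchmarks of any particular model or GPU.

```python
def generation_seconds(num_tokens: int, tokens_per_second: float,
                       time_to_first_token: float = 0.0) -> float:
    """Rough wall-clock time to stream a response of num_tokens tokens."""
    if tokens_per_second <= 0:
        raise ValueError("tokens_per_second must be positive")
    # Startup delay before the first token, plus steady-state generation time.
    return time_to_first_token + num_tokens / tokens_per_second


# Hypothetical throughputs for a 500-token answer.
for label, tps in [("slow local setup", 5), ("fast local setup", 50)]:
    print(f"{label}: {generation_seconds(500, tps):.0f} s")
# slow local setup: 100 s
# fast local setup: 10 s
```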

3. Complex Setup

Deploying a local LLM is more involved than just signing up for a web-based AI service. With an internet connection, your ChatGPT, Bard, or Bing AI account could be ready to start prompting in minutes. Setting up a full local LLM stack requires downloading frameworks, configuring infrastructure, and integrating various components. For larger models, this complex process can take hours, even with tools that aim to simplify installation. Some bleeding-edge AI systems still require deep technical expertise to get running locally. So, unlike plug-and-play web-based AI models, managing your own AI involves a significant technical and time investment.

4. Limited Knowledge

A lot of local LLMs are stuck in the past. They have limited knowledge of current events. Remember when ChatGPT couldn’t access the internet? When it could only provide answers to questions about events that occurred before September 2021? Yes? Well, similar to early ChatGPT models, locally hosted language models are often trained only on data before a certain cutoff date. As a result, they lack awareness of recent developments after that point.

Additionally, local LLMs can’t access live internet data, which limits their usefulness for real-time queries like stock prices or weather. To get even a semblance of real-time data, local LLMs typically require an additional layer of integration with internet-connected services. Internet access is one of the reasons you might consider upgrading to ChatGPT Plus!
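A local model only knows what was in its training data, so the “additional layer of integration” usually means code that fetches fresh data and pastes it into the prompt. The sketch below assumes that approach; `fetch_weather` and `build_prompt` are hypothetical names, the weather service is stubbed out with fixed text, and the final call to the local model is left out.

```python
from datetime import date


def fetch_weather(city: str) -> str:
    """Hypothetical stand-in for a request to an internet-connected weather service.

    A real integration would make a network call here; this stub returns fixed text.
    """
    return f"Weather in {city}: 18 degrees C, light rain"


def build_prompt(question: str, live_context: str, today: date) -> str:
    """Prepend freshly fetched data so an offline model can answer about the present."""
    return (
        f"Today's date is {today.isoformat()}.\n"
        f"Live data (fetched just now):\n{live_context}\n\n"
        f"Using the live data above where relevant, answer: {question}"
    )


prompt = build_prompt("Do I need an umbrella in Oslo today?",
                      fetch_weather("Oslo"), date(2024, 8, 15))
print(prompt)  # this string would then be sent to the local LLM
```

The model itself still never touches the internet; only the small fetching layer does, which keeps the rest of the conversation on your machine.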

Should You Use a Local LLM?

Local large language models provide tempting benefits but also have real downsides to consider before taking the plunge. Less censorship, better privacy, offline access, cost savings, and customization make a compelling case for setting up your LLM locally. However, these benefits come at a price.

With lots of freely available LLMs online, jumping into local LLMs may be like swatting a fly with a sledgehammer: possible but overkill. But remember, if it’s free, you and the data you generate are likely the product. So, there is no definitive right or wrong answer today. Assessing your priorities will determine if now is the right time to make the switch.

- Title: Pioneering Free Tools for AI Artwork

- Author: Larry

- Created at : 2024-08-15 21:53:59

- Updated at : 2024-08-16 21:53:59

- Link: https://tech-hub.techidaily.com/pioneering-free-tools-for-ai-artwork/

- License: This work is licensed under CC BY-NC-SA 4.0.